All production bugs passed through your test suite - here's mine

Discussion on LinkedIn, BlueSky

In Simple Made Easy, Rich Hickey has this quip about bugs:

What's true of every bug found in the field? It got written. What's a more interesting fact about it? It passed the type checker. What else did it do? It passed all the tests.

And I would add:

And where did you find out about the bug? In prod.

So here's a bug I wrote that made it in front of a user. (The only user is my boss. Dunno if that makes it better or worse.)

A simple reporting tool

At work, I've been working on this internal reporting tool.

We use GitHub issues and projects to manage our tasks. We're interested in how long it takes us to go from an issue entering the board (it's officially a to-doable thing) to when it's closed (i.e. the code is hitting main). This number is also known as the lead time.

The tool is no rocket science. The routine usage pattern goes like this:

- Fetch all of the issues on the board
- Figure out the start_date and done_date of each issue
- The difference is your lead time
- For each issue type (based on a label), spit out the minimum and maximum lead time, and number of issues (min, max, sample_size)
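The routine above fits in a few lines of Python. This is a minimal sketch, not the tool's actual code: the Issue dataclass and the summarize function are hypothetical names, and it assumes each issue's start_date and done_date have already been worked out.

```python
from collections import defaultdict
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass
class Issue:
    # Hypothetical shape; the post doesn't show the tool's real data model.
    label: str            # issue type, taken from a label
    start_date: datetime  # when the issue entered the board
    done_date: datetime   # when the issue was closed


def summarize(issues: list[Issue]) -> dict[str, dict]:
    """Return min/max lead time and sample_size per issue type."""
    lead_times: dict[str, list[timedelta]] = defaultdict(list)
    for issue in issues:
        # Lead time = done_date - start_date.
        lead_times[issue.label].append(issue.done_date - issue.start_date)
    return {
        label: {"min": min(times), "max": max(times), "sample_size": len(times)}
        for label, times in lead_times.items()
    }


issues = [
    Issue("bug", datetime(2024, 1, 1), datetime(2024, 1, 3)),
    Issue("bug", datetime(2024, 1, 1), datetime(2024, 1, 8)),
    Issue("feature", datetime(2024, 1, 2), datetime(2024, 1, 12)),
]
print(summarize(issues)["bug"])
# {'min': datetime.timedelta(days=2), 'max': datetime.timedelta(days=7), 'sample_size': 2}
```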

Bug: Issues converted to a discussion don't have a ClosedEvent

To figure out the start_date and done_date, we use a simple heuristic.

- start_date: Assign the issue's created_at as the start_date.
- done_date: Get the issue timeline filtered to closed events. If there's more than one, pick the oldest.
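A minimal sketch of that heuristic in Python. It assumes timeline events arrive as GraphQL-style dicts with __typename and createdAt keys; the post doesn't show the real data shape, and parse_ts, infer_start_date and this signature for infer_done_date are illustrative guesses.

```python
from datetime import datetime
from typing import Optional


def parse_ts(value: str) -> datetime:
    # GitHub timestamps look like "2024-03-05T10:00:00Z"; swap "Z" for an
    # explicit offset because fromisoformat only accepts "Z" from Python 3.11.
    return datetime.fromisoformat(value.replace("Z", "+00:00"))


def infer_start_date(issue: dict) -> datetime:
    # start_date: the issue's creation timestamp.
    return parse_ts(issue["createdAt"])


def infer_done_date(timeline: list[dict]) -> Optional[datetime]:
    # done_date: keep only ClosedEvents and pick the oldest (earliest) one.
    closed = [
        parse_ts(event["createdAt"])
        for event in timeline
        if event["__typename"] == "ClosedEvent"
    ]
    return min(closed) if closed else None
```

For a timeline of close, reopen, close, this picks the first close. An issue whose timeline has no ClosedEvent gets no done_date at all in this sketch.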

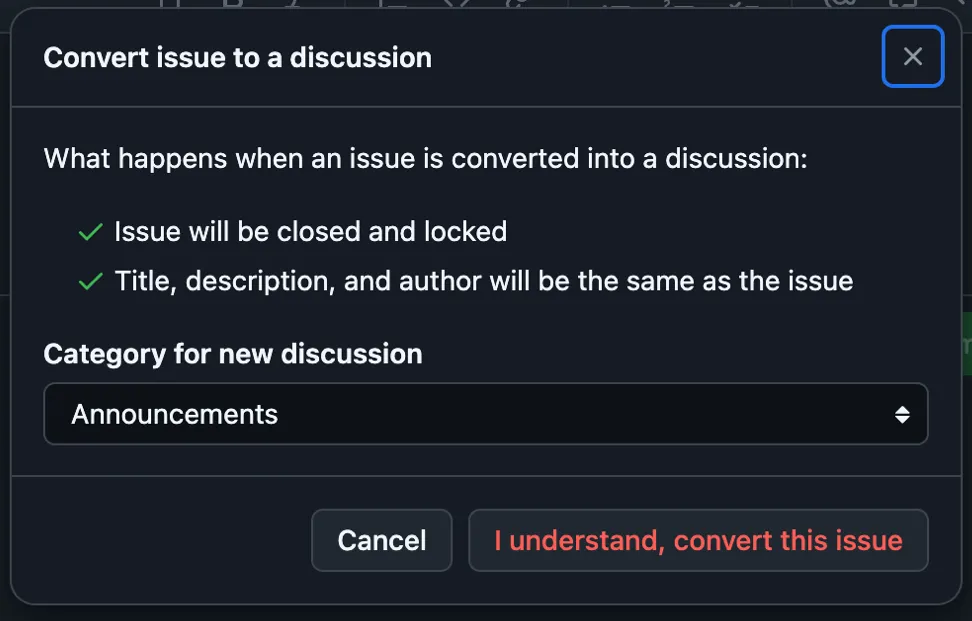

The bug was in the infer_done_date function. It turns out that when you convert a GitHub issue into a discussion, it's closed but doesn't have a ClosedEvent. It has a ConvertedToDiscussionEvent instead.

That makes good sense! But I didn't think of it.

And since converting issues to discussions is quite rare in our workflow, the tool ran fine for a non-trivial amount of time before this happened.
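One possible fix, sketched under the same assumed event shape: treat ConvertedToDiscussionEvent as a "done" event alongside ClosedEvent. DONE_EVENT_TYPES and parse_ts are hypothetical names. If the timeline is filtered by event type on the API side, that filter would presumably need the extra type as well.

```python
from datetime import datetime
from typing import Optional

# Timeline event types treated as "done". ConvertedToDiscussionEvent is the
# one the original heuristic missed.
DONE_EVENT_TYPES = frozenset({"ClosedEvent", "ConvertedToDiscussionEvent"})


def parse_ts(value: str) -> datetime:
    # Normalize the trailing "Z" so older Pythons can parse the timestamp.
    return datetime.fromisoformat(value.replace("Z", "+00:00"))


def infer_done_date(timeline: list[dict]) -> Optional[datetime]:
    # The oldest event of any "done" type wins.
    done = [
        parse_ts(event["createdAt"])
        for event in timeline
        if event["__typename"] in DONE_EVENT_TYPES
    ]
    return min(done) if done else None
```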

Reflections

In terms of testing, the function boils down to two API calls: 1) get me the closed events, 2) update the done_date with the closed event's createdAt date. I find that a PITA to test. When you mock both calls, you're not testing anything. When you don't mock them, you've got yourself an integration test against an external API, and that didn't feel worth it for such a tiny tool.

What I think I could've done is RTFM and take note of the different kinds of events that can occur on the timeline, rather than just cherry-picking the "happy path" one.
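Taking note of the event types can also be made explicit in code. The sketch below uses a hypothetical infer_done_date_strict and assumes an issue dict with number, state and timeline keys; it refuses to guess when a closed issue has no event it recognizes.

```python
from datetime import datetime
from typing import Optional

# Event types we have explicitly decided mean "done"; anything else on a
# closed issue is treated as unknown.
DONE_EVENT_TYPES = frozenset({"ClosedEvent", "ConvertedToDiscussionEvent"})


def parse_ts(value: str) -> datetime:
    return datetime.fromisoformat(value.replace("Z", "+00:00"))


def infer_done_date_strict(issue: dict) -> Optional[datetime]:
    """Like infer_done_date, but fail loudly on a closed issue it can't explain.

    Assumes a hypothetical issue dict with "number", "state" and "timeline" keys.
    """
    if issue["state"] != "CLOSED":
        return None  # still open: no done_date yet
    done = [
        parse_ts(event["createdAt"])
        for event in issue["timeline"]
        if event["__typename"] in DONE_EVENT_TYPES
    ]
    if not done:
        seen = sorted({event["__typename"] for event in issue["timeline"]})
        raise ValueError(
            f"issue #{issue['number']} is closed but has no known done event; saw {seen}"
        )
    return min(done)
```

The design choice is to crash on an unexplained closed issue rather than quietly skip or mis-date it, so an unexpected event type surfaces when the tool runs instead of skewing the min and max.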

On another note, it reminded me of the good old boring technology essay. Flyvbjerg and Gardner recommend similar caution around unproven technologies in How Big Things Get Done. They describe proven tech as having "frozen experience". I like the phrase because it carries some gravitas compared to "boring technology".

Either way, they are talking about the same thing: the failure modes of tech that has been in prod for longer are better explored. Including bugs written by yours truly.

How did you find this? Shoot me a comment on LinkedIn or BlueSky.